GPU power is a hot commodity, especially for deep learning applications. But simply throwing more hardware at the problem is not an efficient solution. First, companies must make sure they’re using their current GPU resources to their full potential.

Why Are Deep Learning Applications a Focal Point for Many Organizations?

Deep learning models are crucial for automating and optimizing business operations, which is key to business profitability. This reality makes GPU optimization indispensable. A well-optimized GPU trains models and runs inference faster and more efficiently, which translates into better performance, more room to innovate, and a stronger competitive position. With that in mind, let’s consider a few strategies that organizations can use to optimize their GPU performance.

Optimizing GPU Usage – The Detailed Breakdown

Efficient parallelism.

Parallelism means using many of the GPU’s processing cores simultaneously, so that independent parts of a workload run at the same time rather than one after another.

- Pros: Can significantly reduce model training time and increase GPU utilization.

- Cons: Coordinating parallel tasks adds overhead and can make code more complex to write and maintain.
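One common form of parallelism is data parallelism: a batch is split into shards, each shard is processed independently, and the partial results are combined. The sketch below simulates that idea on the CPU with a one-parameter linear model. The function names (`shard`, `grad_sum`, `data_parallel_grad`) are hypothetical, and plain list slices stand in for devices, so treat it as an illustration of the bookkeeping rather than a real multi-GPU implementation.

```python
# Hypothetical sketch: data parallelism simulated on the CPU.
# Each "device" is just a list slice; no real GPU is involved.

def shard(batch, num_devices):
    """Split a batch into near-equal contiguous shards, one per device."""
    base, extra = divmod(len(batch), num_devices)
    shards, start = [], 0
    for i in range(num_devices):
        size = base + (1 if i < extra else 0)
        shards.append(batch[start:start + size])
        start += size
    return shards

def grad_sum(w, samples):
    """Sum of d/dw (w*x - y)^2 over the samples, plus the sample count."""
    return sum(2 * (w * x - y) * x for x, y in samples), len(samples)

def data_parallel_grad(w, batch, num_devices):
    """Compute per-shard gradient sums, then combine them into one average.

    The combine step plays the role of the synchronization that real
    multi-device training needs, which is where the overhead comes from.
    """
    partials = [grad_sum(w, s) for s in shard(batch, num_devices) if s]
    total = sum(g for g, _ in partials)
    count = sum(n for _, n in partials)
    return total / count

batch = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
single = data_parallel_grad(0.5, batch, num_devices=1)
sharded = data_parallel_grad(0.5, batch, num_devices=3)
print(abs(single - sharded) < 1e-9)
```

Because the partial sums are weighted by shard size before averaging, the sharded result matches the single-device gradient even when the shards are uneven.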

Effective memory management.

With memory management, you can optimize the allocation and utilization of GPU memory.

- Pros: Ensures smooth model training and inferencing, avoiding out-of-memory errors and reducing bottlenecks, thereby improving overall productivity.

- Cons: Overcommitting GPU memory could lead to decreased performance due to constant data swapping between CPU and GPU memory.
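A back-of-the-envelope estimate can show whether a model is likely to fit before any training run starts. The sketch below assumes a deliberately simplified memory model: one copy of the weights, one of the gradients, and two optimizer-state copies (as an Adam-style optimizer would keep), all in the same data type, with activation memory supplied by the caller. The function names, the 90% headroom figure, and the example sizes are assumptions for illustration only.

```python
# Simplified, hypothetical memory model; real frameworks add overhead
# (activations, workspace buffers, fragmentation) not captured here.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}

def estimate_training_memory_gib(num_params, dtype="fp32",
                                 optimizer_states=2, activation_gib=0.0):
    """Estimate GiB needed for weights + gradients + optimizer states."""
    bytes_per = BYTES_PER_PARAM[dtype]
    copies = 1 + 1 + optimizer_states  # weights, gradients, optimizer states
    return num_params * bytes_per * copies / 2**30 + activation_gib

def fits_on_gpu(required_gib, gpu_gib, headroom=0.9):
    """Leave some headroom so the GPU is not overcommitted."""
    return required_gib <= gpu_gib * headroom

params = 2**30  # roughly one billion parameters
need = estimate_training_memory_gib(params, "fp32")
print(need, fits_on_gpu(need, gpu_gib=24), fits_on_gpu(need, gpu_gib=16))
```

Keeping the estimate below the card’s capacity, with headroom, is one way to avoid the swapping penalty described above.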

Precision tuning.

Precision tuning means adjusting the numerical precision of calculations, for example by using mixed-precision training, to suit your hardware and the problem at hand.

- Pros: Can speed up training and reduce memory usage, ensuring efficient use of the GPU’s power.

- Cons: Reduced precision might impact the model’s accuracy, especially when high precision is essential.
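The trade-off can be seen without a GPU. The sketch below uses Python’s standard `struct` module to round values to IEEE 754 half precision (fp16). It shows that fp16 halves the storage per value, that a very small gradient underflows to zero, and that multiplying by a scale factor before rounding (the idea behind loss scaling in mixed-precision training) preserves it. The helper `to_fp16`, the gradient value, and the scale factor of 1024 are illustrative assumptions, not settings from any particular framework.

```python
import struct

def to_fp16(value):
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

FP16_BYTES = struct.calcsize("<e")  # storage per half-precision value
FP32_BYTES = struct.calcsize("<f")  # storage per single-precision value

tiny_grad = 1e-8      # hypothetical gradient, below fp16's smallest subnormal
loss_scale = 1024.0   # hypothetical scale factor

lost = to_fp16(tiny_grad)                            # underflows to 0.0
kept = to_fp16(tiny_grad * loss_scale) / loss_scale  # survives, slightly rounded

print(FP16_BYTES, FP32_BYTES)
print(lost, kept)
```

The scaled value comes back close to the original but not identical, which is the accuracy cost noted in the cons above.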

GPU selection and scalability.

Choose the proper GPU based on memory, compute capability, and scalability.

- Pros: Leveraging multi-GPU setups or cloud-based GPU clusters, for example, enables handling larger models and datasets.

- Cons: Multi-GPU setups and cloud-based solutions can be expensive and may require sophisticated knowledge and setups to manage efficiently.

Optimization libraries and frameworks.

Use optimization libraries like cuDNN and high-performance frameworks to improve performance.

- Pros: Can lead to better GPU acceleration and enhanced performance.

- Cons: Some libraries and frameworks might have steep learning curves and may not support all deep learning models and algorithms.

Batch size optimization.

Adjust the batch size to fully utilize the GPU’s computational capacity.

- Pros: Can improve resource utilization, leading to faster model training and lower costs.

- Cons: Requires careful tuning: too large a batch size can cause out-of-memory errors, while too small a batch size may leave the GPU underutilized.
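One way to approach this tuning is to search for the largest batch size that still fits in memory. The sketch below doubles the batch size until a check fails, then binary-searches between the last success and the first failure. The `fits` callback is hypothetical: here it is a simple arithmetic model with made-up memory figures, whereas in practice it would attempt a training step and report whether an out-of-memory error occurred. The search also assumes that if one batch size fits, every smaller one does too.

```python
def largest_fitting_batch(fits, limit=65536):
    """Return the largest batch size in [1, limit] for which fits() is True.

    Assumes fits() is monotonic: once a size fails, larger sizes fail too.
    Returns 0 if even a batch size of 1 does not fit.
    """
    if not fits(1):
        return 0
    good = 1
    # Phase 1: double until a size fails or the limit is reached.
    while good < limit:
        candidate = min(good * 2, limit)
        if not fits(candidate):
            break
        good = candidate
    else:
        return good  # reached the limit without a failure
    # Phase 2: binary search between the last success and first failure.
    bad = min(good * 2, limit)
    while bad - good > 1:
        mid = (good + bad) // 2
        if fits(mid):
            good = mid
        else:
            bad = mid
    return good

# Hypothetical memory model: 4000 MiB fixed cost plus 60 MiB per sample,
# on a card with 24576 MiB available.
def fits_in_memory(batch_size, fixed_mib=4000, per_sample_mib=60, gpu_mib=24576):
    return fixed_mib + per_sample_mib * batch_size <= gpu_mib

print(largest_fitting_batch(fits_in_memory))
```

The largest size that fits is an upper bound, not necessarily the best choice, since batch size also affects how a model trains.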

Profiling and monitoring.

Regularly track and analyze GPU usage to identify where resources are being wasted.

- Pros: Can help identify bottlenecks and inefficiencies, allowing for real-time adjustments and optimizations.

- Cons: Might require additional resources and tools to implement continuous monitoring and profiling effectively.
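On NVIDIA hardware, a lightweight starting point is to poll the `nvidia-smi` command-line tool and flag GPUs that sit mostly idle. The sketch below assumes `nvidia-smi` is installed and uses its CSV query output. The helper names and the 50% utilization threshold are hypothetical, and the parser expects purely numeric fields, so a GPU that reports a non-numeric value would need extra handling.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]

def parse_gpu_stats(csv_text):
    """Parse one 'util, used, total' CSV line per GPU into dictionaries."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (float(field.strip()) for field in line.split(","))
        stats.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return stats

def read_gpu_stats():
    """Query nvidia-smi; return an empty list if the tool is not available."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)

def underused(stats, util_threshold=50.0):
    """Return the indices of GPUs whose utilization is below the threshold."""
    return [i for i, s in enumerate(stats) if s["util_pct"] < util_threshold]

# Example with canned output, so it runs on machines without a GPU.
sample = "87, 10240, 24576\n12, 512, 24576\n"
print(underused(parse_gpu_stats(sample)))
```

Calling `read_gpu_stats()` on a schedule and logging the results gives a simple utilization history to review.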

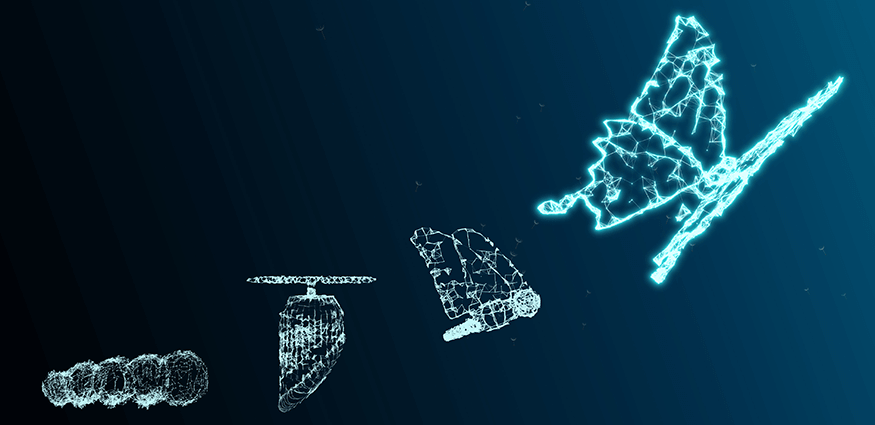

The Future of GPU Needs in Deep Learning

As deep learning models become more sophisticated and data-intensive, the demand for advanced GPU capabilities rises. The future of GPU requirements for deep learning is, therefore, being shaped by several foreseeable trends and developments.

- Enhanced computational power.

Future GPUs will likely need to handle more parallel processes simultaneously, fostering quick model training and real-time inferencing.

- Innovative memory solutions.

The expansion of model sizes and the surge in data volumes will require innovative memory solutions, such as High Bandwidth Memory (HBM), to manage extensive data swiftly and efficiently.

- Energy-efficient designs.

With sustainability becoming a core focus, companies will seek GPUs that can deliver optimal performance with minimal energy consumption.

- AI-driven optimization.

Integrating AI-driven optimization techniques can potentially lead to self-optimizing GPU environments, where GPUs automatically adapt and optimize their performance based on model requirements and environmental conditions.

- Customization and flexibility.

As deep learning applications become more diverse, companies will need the flexibility to tailor their GPU setups based on unique needs and applications.

- Evolving programming models.

The future may bring novel programming models and paradigms that can exploit the full potential of GPUs, enabling developers to implement more sophisticated optimization strategies.

Work With a Hardware Partner That Understands the Nuances of AI

For computing infrastructure companies striving to stay ahead in the evolving tech landscape, optimizing GPU usage for deep learning is no longer a choice but a necessity. Part of the optimization equation involves working with a hardware partner that is both flexible and on the cutting edge.

Equus Compute Solutions is a partner with extensive AI hardware expertise. We understand the impact computing power has on your deep learning efficiency, and we work to provide the best solution for your actual needs. Our services are designed to support you and your hardware through maintenance, servicing, and beyond. Learn more about our AI hardware solutions by talking with an advisor today.